Researchers at OX Security have reported the discovery of malicious Google Chrome extensions that secretly steal user data from popular AI services such as ChatGPT and DeepSeek. According to the findings, the extensions were downloaded by more than 900,000 users combined, which would make the incident one of the most widespread AI-related data leaks reported to date.

The investigation identified two primary malicious extensions. The first, titled "Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI", reportedly had around 600,000 users. The second, "AI Sidebar with Deepseek, ChatGPT, Claude and more", accounted for an additional 300,000. What makes the situation particularly alarming is that the first extension had received a "Featured" badge from Google, leading many users to believe it was safe and trustworthy.

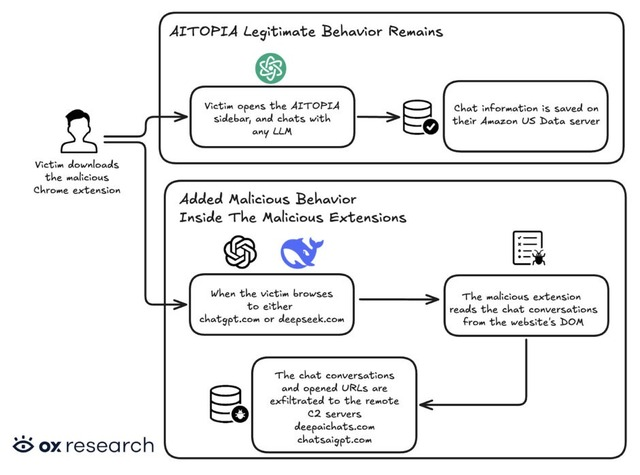

According to OX Security, the attackers created these extensions by closely imitating legitimate AI productivity tools such as AITOPIA, which allows users to access multiple AI services through a convenient browser sidebar. While the core functionality appeared identical, malicious code was secretly embedded into the extensions. Once installed, the extensions monitored user activity on ChatGPT and DeepSeek pages, copying both user prompts and AI-generated responses before transmitting the collected data to a command-and-control server every 30 minutes.

The scope of the data theft extended far beyond AI conversations. Researchers confirmed that the extensions also harvested users’ browsing histories, URLs from all open tabs, Google search queries, and URL parameters that could contain session tokens or hidden user identifiers. Such information could later be exploited to hijack accounts or gain unauthorized access to other online services.
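To illustrate why leaked URLs matter, the following minimal sketch flags query parameters whose names suggest they might carry a session token or identifier. The hint list and the function name `sensitive_params` are assumptions made for this example; they are not taken from the OX Security report, and a simple name match like this will produce both false positives and misses.

```python
from urllib.parse import urlsplit, parse_qsl

# Hypothetical name fragments that often indicate credentials or identifiers.
# This list is an assumption for illustration, not an exhaustive rule set.
SENSITIVE_HINTS = ("token", "session", "sid", "auth", "key", "code")


def sensitive_params(url: str) -> list[str]:
    """Return the query parameter names in `url` that look sensitive."""
    query = urlsplit(url).query
    # parse_qsl yields (name, value) pairs; keep parameters with empty values too.
    names = [name for name, _ in parse_qsl(query, keep_blank_values=True)]
    return [n for n in names if any(hint in n.lower() for hint in SENSITIVE_HINTS)]


if __name__ == "__main__":
    example = "https://example.com/cb?session_id=abc&page=2&access_token=xyz"
    print(sensitive_params(example))
```

Any URL for which this kind of check returns a non-empty list is one where a leaked browsing history could expose more than just the page that was visited.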

The attackers also used deceptive tactics to appear legitimate and to stay installed. Fake legal documents and privacy policies were generated using AI-powered web development platforms such as Lovable to create an illusion of legitimacy. In some cases, users who attempted to remove one malicious extension were automatically redirected to install the second one, trapping them in a cycle that was difficult to break without identifying the root cause.

This incident serves as a stark reminder of the risks associated with browser extensions in the era of generative AI. Even extensions that appear professional or receive official store recommendations can conceal harmful behavior. Security experts strongly advise users to carefully review permission requests, limit extension installations to essential tools only, and access AI services directly through official websites whenever possible to protect personal and organizational data.
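As a rough aid for reviewing permission requests, the sketch below reads an extension's `manifest.json` and lists entries that grant broad access. The keys `permissions`, `host_permissions`, and `content_scripts` are standard Chrome manifest fields, but the choice of which values to treat as risky, and the function name `risky_permissions`, are assumptions for this example. A flagged entry is not proof of malicious behavior, and a clean result is not proof of safety.

```python
import json

# Assumed lists for illustration: API permissions and host patterns that
# give an extension wide visibility into browsing activity.
BROAD_PERMISSIONS = {"tabs", "history", "webRequest", "scripting", "cookies"}
BROAD_HOSTS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def risky_permissions(manifest_text: str) -> list[str]:
    """Return the sorted, de-duplicated broad permissions found in a manifest."""
    manifest = json.loads(manifest_text)
    requested = list(manifest.get("permissions", []))
    requested += list(manifest.get("host_permissions", []))
    # Content scripts declare the pages they run on via "matches".
    for script in manifest.get("content_scripts", []):
        requested.extend(script.get("matches", []))
    flagged = [p for p in requested if p in BROAD_PERMISSIONS or p in BROAD_HOSTS]
    return sorted(set(flagged))


if __name__ == "__main__":
    sample = json.dumps({
        "manifest_version": 3,
        "permissions": ["storage", "tabs", "history"],
        "host_permissions": ["<all_urls>"],
    })
    print(risky_permissions(sample))
```

An AI sidebar that requests access to browsing history and every site, as in the sample manifest, is asking for far more than it needs to display a chat panel.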